Week 12

Large Language Models: Theory and Fine-tuning a Transformer-based Model (Invited Speaker Dr. Sebastian Vallejo)

Topics

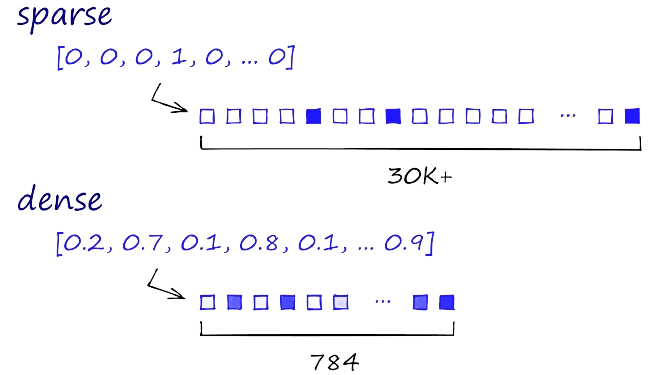

- We will learn about the Transformer architecture, the attention mechanism, and the encoder-decoder structure.
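At the core of the Transformer is scaled dot-product attention, defined in Vaswani et al. (2017) as softmax(QK^T / sqrt(d_k)) V. The following is a minimal pure-Python sketch of that formula only, assuming small hand-written matrices. The helper names (`matmul`, `transpose`, `softmax`, `scaled_dot_product_attention`) are illustrative and are not taken from the course code. Real models compute Q, K and V with learned projections, run several heads in parallel, and use tensor libraries rather than nested lists.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Turn a row of scores into probabilities that sum to 1."""
    # Subtract the max before exponentiating for numerical stability.
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for one attention head."""
    d_k = len(k[0])
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # Each row becomes a distribution over the keys (the attention weights).
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v)


# Self-attention over three toy 2-dimensional "token" vectors (Q = K = V = X).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = scaled_dot_product_attention(X, X, X)
print(out)
```

Because each output row is a convex combination of the value vectors, a token's new representation mixes in information from every other token in proportion to how strongly its query matches their keys.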

Readings

Required Readings

[SLP] - Chapter 10.

Alammar, J. (2018). The Illustrated Transformer. https://jalammar.github.io/illustrated-transformer/

Vaswani, A., et al. (2017). Attention is all you need. Advances in Neural Information Processing Systems, 30.

Timoneda and Vera (forthcoming). BERT, RoBERTa or DeBERTa? Comparing Performance Across Transformer Models in Political Science Text. Journal of Politics.

Class Materials

- Code here: https://svallejovera.github.io/cpa_uwo/week-9-transformers.html